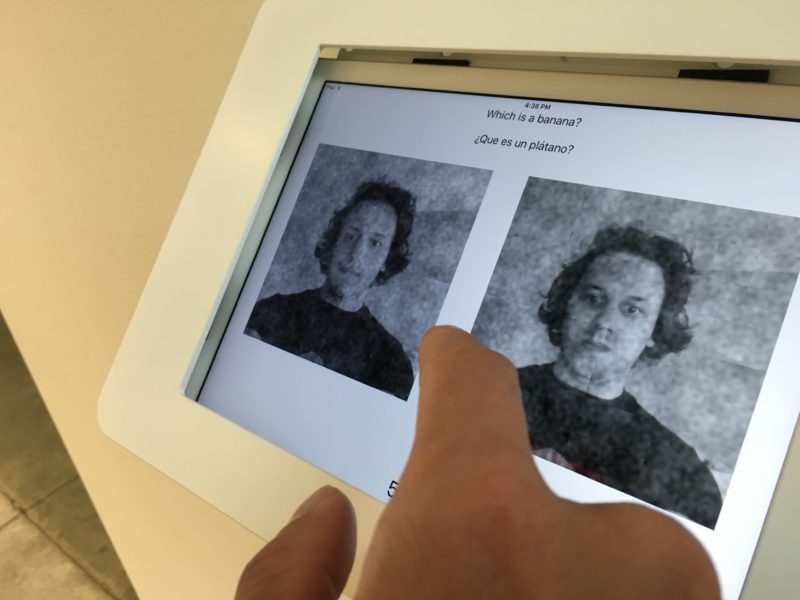

[help me know the truth] is a software-driven participatory artwork in which visitors first snap a digital self-portrait (or “selfie”) at the gallery. The image is then sent around the gallery’s network and appears on digital stations located around the gallery. Using the tools of cognitive neuroscience, the faces are manipulated with noise patterns to literally, through time and user input, ‘construct’ the perfect stereotype.

On digital stations in the gallery, visitors are asked to choose which of two slightly altered portraits best matches the text label shown. As these selections accumulate over time, facial features emerge from what are otherwise random patterns, revealing unconscious beliefs about facial features or tendencies related to culture and identity.

http://maryflanagan.com/work/help-me-know-the-truth/

[help me know the truth] utilizes Reverse Correlation to investigate how psychological responses to people’s faces might uncover both positive and negative reactions to those who visit the gallery. The viewer/participant chooses between two copies of the same selfie, each with different computational noise applied. The faces appear somewhat blurry, so the viewer/participant picks whichever blurry image better matches the criterion given. The list of prompts for visitors to the gallery ranges from the politically charged to the taboo: “Choose the victim” falls after “Indicate the leader” but might lead to the timely “Select the terrorist.” Other judgements passed by visitors include identifying which face is the most angelic, kind, criminal, etc. Through choosing faces manipulated by particular noise patterns, facial features emerge that reveal larger thoughts and beliefs about how we fundamentally see each other.
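For readers curious about the mechanics, the selection process described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the software used in the installation: the four-pixel "face", the uniform noise, the simulated visitor, and all function names are hypothetical. It shows only the core idea that averaging the noise patterns a viewer selects makes a pattern emerge from random input.

```python
import random


def add_noise(base, noise):
    """Return a copy of the base image with a noise pattern added pixel by pixel."""
    return [b + n for b, n in zip(base, noise)]


def classification_image(base, choose, n_trials, seed=0):
    """Average the noise patterns of the options picked across all trials.

    `choose(option_a, option_b)` returns True when the viewer picks option A.
    Assumption: each trial draws two independent uniform noise patterns.
    """
    rng = random.Random(seed)
    total = [0.0] * len(base)
    for _ in range(n_trials):
        noise_a = [rng.uniform(-1.0, 1.0) for _ in base]
        noise_b = [rng.uniform(-1.0, 1.0) for _ in base]
        picked_a = choose(add_noise(base, noise_a), add_noise(base, noise_b))
        chosen = noise_a if picked_a else noise_b
        total = [t + n for t, n in zip(total, chosen)]
    return [t / n_trials for t in total]


def simulated_visitor(template):
    """Hypothetical viewer who prefers whichever option better matches a hidden template."""
    def choose(option_a, option_b):
        score_a = sum(p * w for p, w in zip(option_a, template))
        score_b = sum(p * w for p, w in zip(option_b, template))
        return score_a >= score_b
    return choose


# Toy example: a flat four-pixel "face" and a viewer whose hidden bias
# favors a bright first pixel and a dark second pixel.
base_face = [0.5, 0.5, 0.5, 0.5]
hidden_bias = [1.0, -1.0, 0.0, 0.0]
result = classification_image(base_face, simulated_visitor(hidden_bias), n_trials=1000)
print([round(v, 2) for v in result])
```

In this toy setup the averaged noise drifts toward the simulated viewer's hidden preference in the first two pixels and stays near zero in the others, which is the sense in which features "emerge" from otherwise random patterns.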

Why do people, even internationally, tend to gravitate towards similar stereotypes? Bias against ‘the other’ is a dangerous impediment to a just twenty-first-century society, in part encouraged by our own neurological structures that have not caught up with our lived realities. Hyper-scale image-based categorization is being deployed in government and surveillance programs worldwide. These processes demand our critical attention. Where do we find the “truth” about each other this way?

[help me know the truth] raises awareness about the unconscious stereotypes we all carry in our minds, and how these beliefs become embedded in myriad software systems including computer vision programs. My intent is to both utilize and question how computational techniques can uncover the categorizing systems of the mind, and how software itself is therefore subject to socially constructed fears and values. [help me know the truth] provokes discussion about the types of biases that surround us: that we are under global technological surveillance is troubling; that the humans involved in crafting these systems, the systems themselves, and the people brought in to make final calls on various warnings, alerts, and arrests are all products of unconscious biases, is troubling. Perhaps software systems do not help us know the truth at all.

Mary Flanagan (US) plays with the anxious and profound relationship between technological systems and human experience. Her artwork ranges from game-based installations to computer viruses, embodied interfaces to interactive texts. In her experimental interactive writing, she’s interested in how chance operations bring new texts into being. Flanagan’s work has been exhibited internationally at venues including The Whitney Museum of American Art, The Guggenheim, Tate Britain, Postmasters, Steirischer Herbst, Ars Electronica, Artist’s Space, LABoral, the Telfair Museum, ZKM Medienmuseum, and museums in New Zealand, South Korea, and Australia. She was awarded an honoris causa in design in 2016, was a fellow in 2017 at the Getty Museum, and in 2018 she was a cultural leader at the World Economic Forum in Davos, Switzerland.

Credits:

Thanks to Jared Segal, Kristin Walker, Danielle Taylor; open source RC software by Dr. Ron Dotsch.

Supported by: The Leslie Center for the Humanities, Dartmouth College