Manabe Daito (photo: Shizuo Takahashi)

https://research.rhizomatiks.com/s/works/discrete_figures/credit.html

Tokyo-based artist, interaction designer, programmer, and DJ.

Launched Rhizomatiks in 2006. Since 2015, has served alongside Motoi Ishibashi as co-director of Rhizomatiks Research, the firm’s division dedicated to exploring new possibilities in the realms of technical and artistic expression with a focus on R&D-intensive projects. Specially-appointed professor at Keio University SFC.

Manabe’s work in design, art, and entertainment takes a new approach to everyday materials and phenomena. However, his end goal is not simply to achieve rich, high-definition realism by recognizing and recombining these familiar building blocks. Rather, his practice is informed by careful observation that seeks to discover and elucidate the essential potentialities inherent in the human body, data, programming, computers, and other phenomena, thus probing the interrelationships and boundaries between the analog and digital, real and virtual.

A prolific collaborator, he has worked closely with a diverse roster of artists, including Ryuichi Sakamoto, Björk, OK Go, Nosaj Thing, Squarepusher, Andrea Battistoni, Mansai Nomura, Perfume, and sakanaction. Further engagements include groundbreaking partnerships with the Jodrell Bank Center for Astrophysics in Manchester and the European Organization for Nuclear Research (CERN), the world’s largest particle physics laboratory.

He is the recipient of numerous awards for his multidisciplinary contributions to advertising, design, and art. Notable recognitions include the Ars Electronica Distinction Award, Cannes Lions International Festival of Creativity Titanium Grand Prix, D&AD Black Pencil, and the Japan Media Arts Festival Grand Prize.

[ART ACTIVITIES]

Manabe is an innovator in data analysis and data visualization. Notable artist collaborations range from the “Sensing Streams” installation created with Ryuichi Sakamoto, to performances of the ancient Japanese dance “Sanbaso” with prominent actor Mansai Nomura, to Verdi’s opera “Otello” as conducted by Andrea Battistoni. As a recent example, Manabe was selected for a flagship commission and residency program in 2017 at the Jodrell Bank Center for Astrophysics, a national astronomy and astrophysics research center housed at the University of Manchester. His close partnership with researchers and scientists culminated in “Celestial Frequencies,” a groundbreaking data-driven audiovisual work projected onto the observatory itself.

[MUSIC/DJ ACTIVITIES]

In 2015, Manabe developed the imaging system for Björk’s music video “Mouth Mantra”, and oversaw the production of AR/VR live imaging for her “Quicksand” performance.

In performance with Nosaj Thing, Manabe has appeared at international music festivals including the Barcelona Sónar Festival 2017 and Coachella 2016. He has also directed a number of music videos for Nosaj Thing; his work on “Cold Stares ft. Chance the Rapper + The O’My’s” was recognized with an Award of Distinction in the Prix Ars Electronica’s Computer Animation/Film/VFX division. Further directorial work includes music videos for artists such as Squarepusher, FaltyDL, and Timo Maas.

As a DJ with over two decades of experience, Manabe has opened for international artists such as Flying Lotus and Squarepusher during their Japan tours. His wide repertoire spans from hip-hop and IDM to juke, future bass, and trap. Manabe has also been invited to perform at numerous music festivals around the globe.

[PERFORMING ARTS ACTIVITIES]

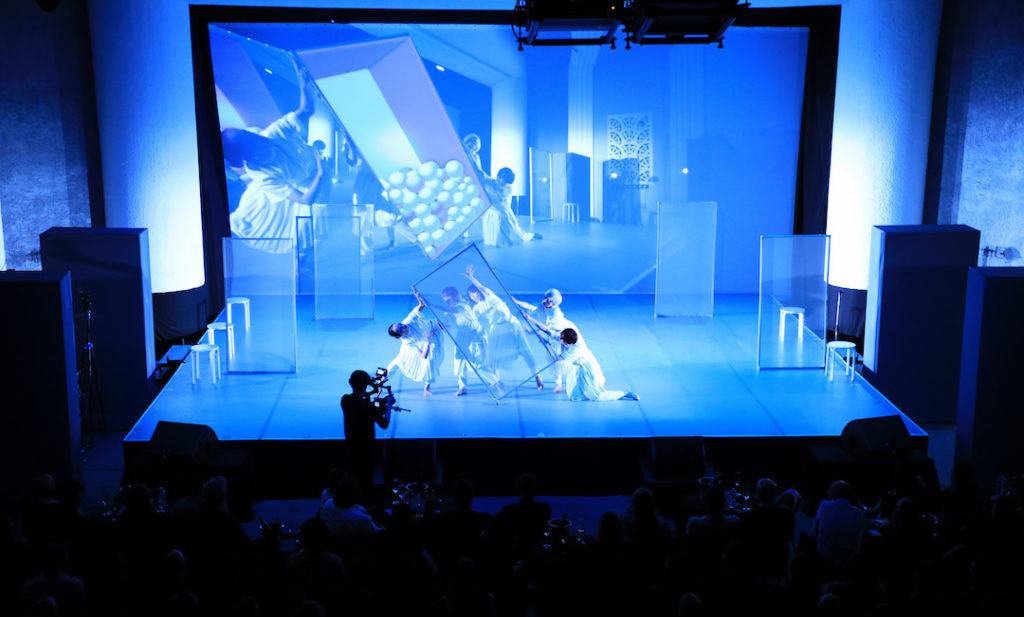

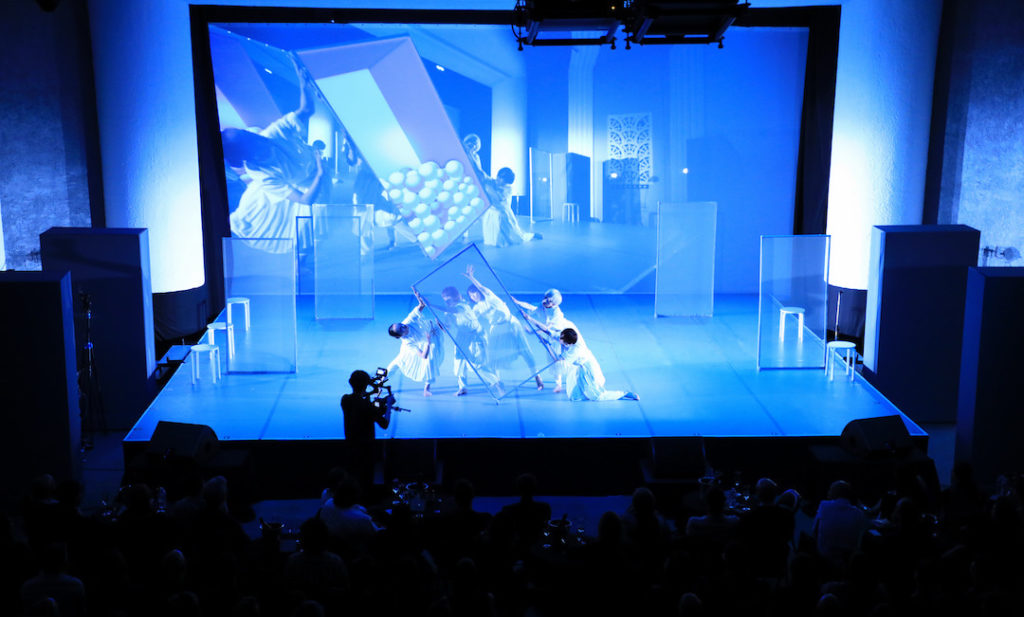

Manabe’s collaborations on dance performances with MIKIKO and ELEVENPLAY have showcased a wide array of technology including drones, robotics, machine learning, and even volumetric projection to create 3D images in the air from a massive volume of rays. Additional data-driven performances have explored innovative applications of dance data and machine learning. These collaborations have been performed at major festivals including Ars Electronica, Sónar (Barcelona), Scopitone (Nantes), and MUTEK (Mexico City) to widespread media acclaim (WIRED, Discovery Channel, etc.).

[EDUCATION ACTIVITIES]

Manabe is actively involved in the development and implementation of media artist summits (notably, the Flying Tokyo lecture series) as well as other educational programs (media art workshops for high school students, etc.) designed to cultivate the next generation of creators.

‘discrete figures’ explores the interrelationships between the performing arts and mathematics, giving rise to mathematical entities that engage with the bodies of human dancers onstage.

DAITO MANABE

Alan Turing applied mathematics to disembody the brain from its corporeal host. He sought to expand his body, transplanting his being into an external vessel. In a sense, he sought to replicate himself in mechanical form. Turing saw his computers as none other than bodies (albeit mechanical), irrevocably connected to his own flesh and blood. Although onlookers would see a sharp delineation between man and machine, in his eyes, this progeny did not constitute a distant Other. Rather, he was the father of a “living machine,” a veritable extension of his own body, and a mirror onto the act of performing living, breathing mathematics.

―Daito Manabe

Making of (from the official site)

https://research.rhizomatiks.com/s/works/discrete_figures/en/technology.html

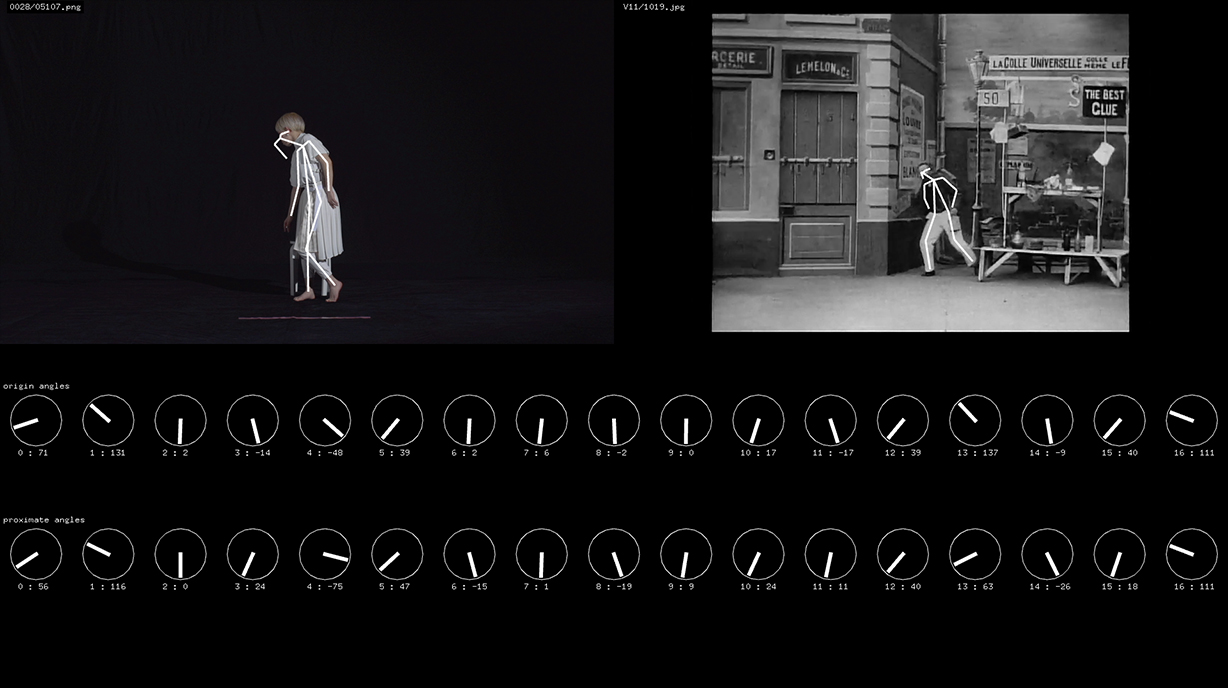

History Scene

Music 01

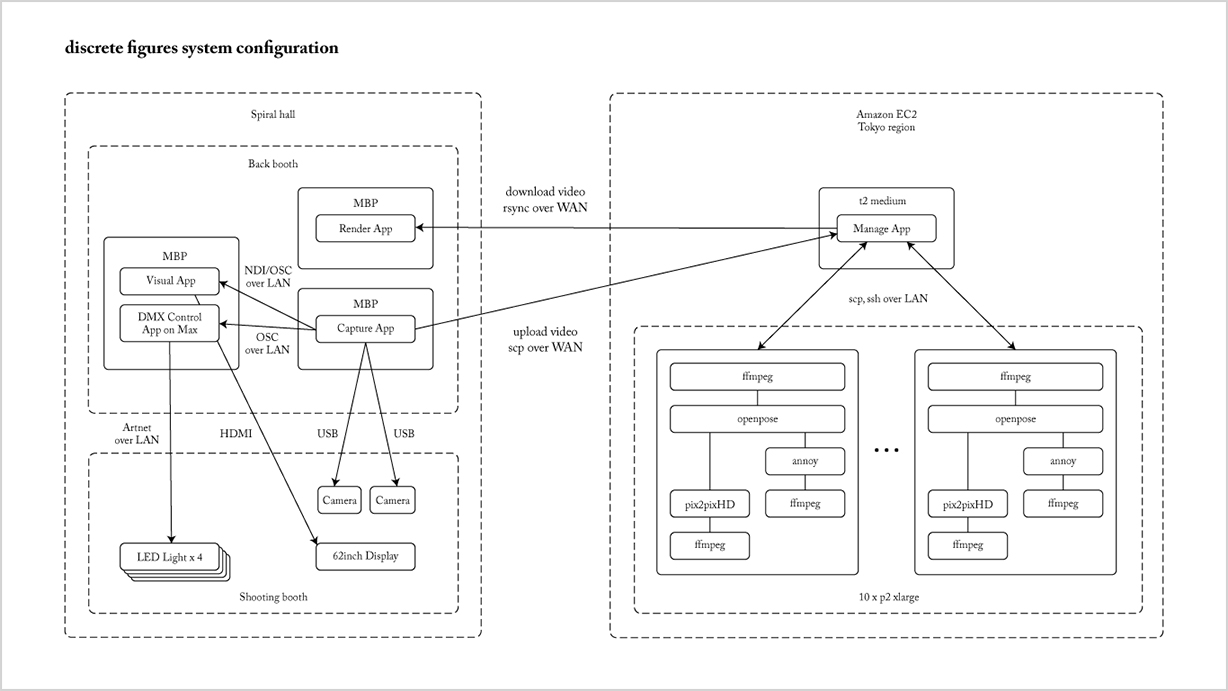

Using OpenPose, we analyzed publicly available stage footage and movie scenes to collect pose data, and developed a nearest-neighbor search system that matches this data against pose data obtained from the dancer’s movements. Working from footage of the actual choreography for this piece, we attempted to build a sequence out of the video material whose pose was closest on a per-frame basis.
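As a rough illustration of the per-frame matching described above, the following is a minimal sketch of a brute-force nearest-neighbor lookup. It assumes each pose is a list of 2D keypoints in a fixed joint order, as a pose estimator might output. The function names, the normalization step, and the toy three-joint poses are all hypothetical and are not taken from the production system.

```python
import math

def normalize_pose(keypoints):
    """Center a pose on its mean point and scale it to unit size.

    keypoints: list of (x, y) tuples, one per body joint.
    """
    n = len(keypoints)
    cx = sum(x for x, _ in keypoints) / n
    cy = sum(y for _, y in keypoints) / n
    centered = [(x - cx, y - cy) for x, y in keypoints]
    scale = math.sqrt(sum(x * x + y * y for x, y in centered)) or 1.0
    return [(x / scale, y / scale) for x, y in centered]

def pose_distance(a, b):
    """Euclidean distance between two normalized poses with the same joint order."""
    return math.sqrt(sum((ax - bx) ** 2 + (ay - by) ** 2
                         for (ax, ay), (bx, by) in zip(a, b)))

def nearest_pose(query, library):
    """Return the index of the library pose closest to the query pose."""
    q = normalize_pose(query)
    distances = [pose_distance(q, normalize_pose(p)) for p in library]
    return min(range(len(library)), key=distances.__getitem__)

# Hypothetical three-joint poses (head, left hand, right hand),
# in toy coordinates with y pointing up.
library = [
    [(0, 0), (-1, 1), (1, 1)],    # hands above the head
    [(0, 0), (-1, -1), (1, -1)],  # hands below the head
]
# Same shape as the first library pose, but shifted, scaled, and slightly noisy.
dancer_frame = [(10, 10), (8, 12.1), (12, 11.9)]
best = nearest_pose(dancer_frame, library)
```

Normalizing before comparison makes the match depend on body shape rather than on where the figure stands in the frame or how large it appears. A library of real footage would likely need an indexed search structure rather than a linear scan.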

1 audience scene

Music 09

We set up a booth in the venue lobby and filmed the audience. The participants’ clothing characteristics and movements were analyzed on multiple remote servers right up until the performance. Combining that analysis with motion data from the ELEVENPLAY dancers, we were able to feature audience members as dancers.

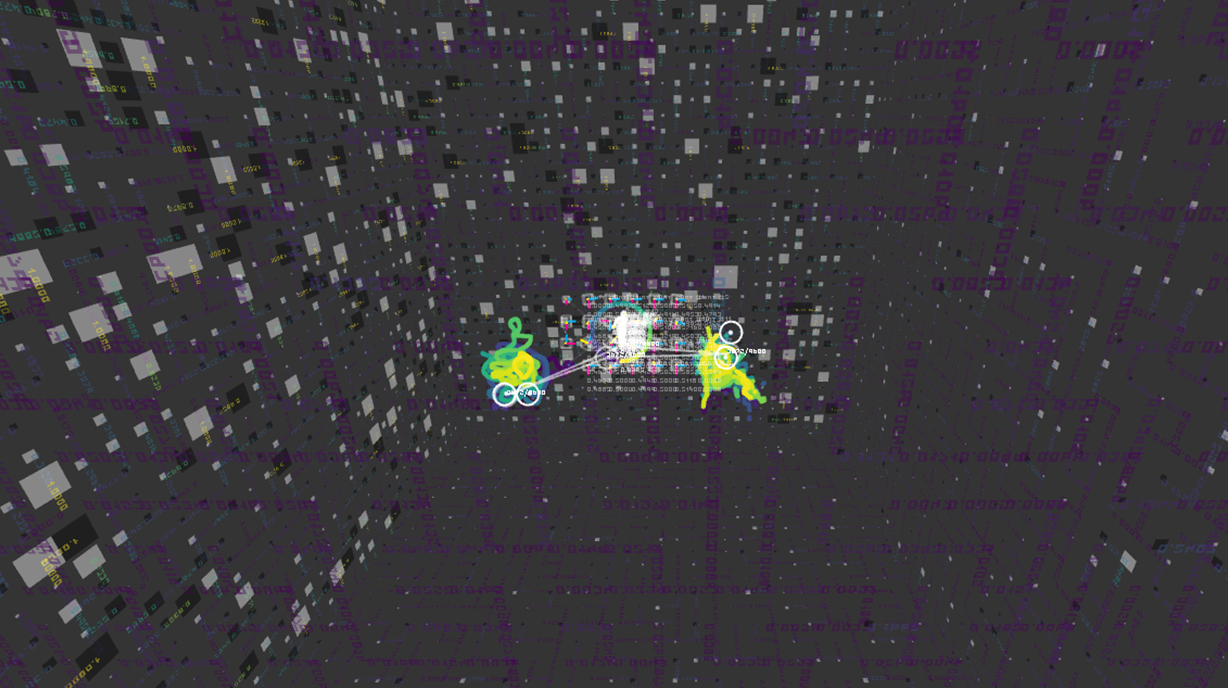

2 dimensionality reduction scene

Music 09

Using dimensionality reduction techniques, we projected the motion data into two and three dimensions and visualized the result.
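The text does not name the reduction technique that was used. Purely for illustration, the sketch below assumes principal component analysis (PCA), computed with power iteration so that it needs only the standard library. The function name, parameters, and toy data are hypothetical.

```python
import math
import random

def pca_project(frames, n_components=2, iterations=200, seed=0):
    """Project high-dimensional frames onto their top principal components.

    frames: list of equal-length lists of floats (one flattened pose per frame).
    Returns one list of n_components coordinates per frame.
    """
    rng = random.Random(seed)
    dim = len(frames[0])
    mean = [sum(f[i] for f in frames) / len(frames) for i in range(dim)]
    centered = [[f[i] - mean[i] for i in range(dim)] for f in frames]

    def cov_times(v):
        # Multiply the (unnormalized) covariance matrix X^T X by v
        # without forming the matrix explicitly.
        scores = [sum(r[i] * v[i] for i in range(dim)) for r in centered]
        return [sum(s * r[i] for s, r in zip(scores, centered)) for i in range(dim)]

    components = []
    for _component in range(n_components):
        v = [rng.gauss(0, 1) for _i in range(dim)]
        for _step in range(iterations):
            w = cov_times(v)
            # Remove directions already found so the next one is orthogonal.
            for c in components:
                dot = sum(wi * ci for wi, ci in zip(w, c))
                w = [wi - dot * ci for wi, ci in zip(w, c)]
            norm = math.sqrt(sum(wi * wi for wi in w))
            if norm == 0:
                break
            v = [wi / norm for wi in w]
        components.append(v)

    return [[sum(r[i] * c[i] for i in range(dim)) for c in components]
            for r in centered]

# Toy "motion data": ten frames of a four-dimensional pose vector.
frames = [[t, 2 * t, 0.1 * math.sin(t), 0.0] for t in range(10)]
points = pca_project(frames, n_components=2)
```

Each entry of `points` is a 2D coordinate that can be plotted directly; passing `n_components=3` gives the three-dimensional variant. The sign of each axis is arbitrary, which is normal for PCA.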

AI Dancer scene

Music 10

We were interested in dance itself: the different types of dancers and styles, and how musical beats connect to improvisational dance. To explore this further, we worked with Parag Mital to create a network called dance2dance:

https://github.com/pkmital/dance2dance

This network is based on Google’s seq2seq architecture. It is similar to char-rnn in that it is a neural network architecture that can be used for sequential modeling.

https://google.github.io/seq2seq/

https://github.com/karpathy/char-rnn

Using the motion capture system, approximately 2.5 hours’ worth of dance data was captured at 60 fps across about 40 sessions. In each session the dancers improvised under 11 themes: joyful, angry, sad, fun, robot, sexy, junkie, chilling, bouncy, wavy, and swingy. To maintain a constant flow, the dancers were given a beat of 120 bpm.
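The figures above imply the size of the dataset and how the beat lines up with the frame rate. The sketch below works through that arithmetic and then shows one way a motion sequence could be cut into input and target windows for a sequence-to-sequence model. The four-beat window lengths and the `make_pairs` helper are hypothetical; how dance2dance actually prepares its data is not described here.

```python
FPS = 60     # capture rate stated in the text
BPM = 120    # tempo stated in the text
HOURS = 2.5  # approximate total duration stated in the text

total_frames = int(HOURS * 3600 * FPS)  # about 540,000 frames in total
frames_per_beat = FPS * 60 // BPM       # 30 frames per beat

def make_pairs(frames, beats_in=4, beats_out=4):
    """Slice a motion sequence into (input, target) windows aligned to beats.

    Window lengths of four beats are a hypothetical choice for illustration.
    """
    n_in = beats_in * frames_per_beat
    n_out = beats_out * frames_per_beat
    pairs = []
    # Step one beat at a time so every window starts on a beat.
    for start in range(0, len(frames) - n_in - n_out + 1, frames_per_beat):
        source = frames[start:start + n_in]
        target = frames[start + n_in:start + n_in + n_out]
        pairs.append((source, target))
    return pairs

# Ten seconds of placeholder "frames" (integers standing in for pose vectors).
clip = list(range(10 * FPS))
pairs = make_pairs(clip)
```

Because 60 fps divides evenly by the 120 bpm tempo, every beat falls exactly on a frame boundary, which keeps beat-aligned windows simple.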

Background movie

This time we generated the background movie with “StyleGAN”, which was introduced in the paper “A Style-Based Generator Architecture for Generative Adversarial Networks” by NVIDIA Research.

http://stylegan.xyz/paper

“StyleGAN” has been released as open source. The code is available on GitHub.

https://github.com/NVlabs/stylegan

We trained this “StyleGAN” on an NVIDIA DGX Station using data we had captured from the dance performance.

https://www.nvidia.com/ja-jp/data-center/dgx-station/

Hardware

Drone

For hardware, we used five palm-sized microdrones. Due to their small size, they are safer and more mobile than older models. Because of their small bodies, they create the visual effect of balls of light floating on stage. The drones’ positions are measured externally via a motion capture system. They are controlled in real time over 2.4 GHz wireless communication. The drone movements are produced from motion capture data that has been analyzed and generated in advance.
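The actual control scheme is not described. As a minimal sketch of the general idea (an externally measured position steering a drone along a pre-generated path), the code below assumes a simple proportional controller and an idealized drone that moves exactly as commanded. The gain, speed limit, 60 Hz tick rate, and function names are all hypothetical.

```python
def velocity_command(measured, target, gain=2.0, max_speed=1.0):
    """Proportional controller: steer toward the target, capped at max_speed.

    measured, target: (x, y, z) positions in meters.
    Returns a (vx, vy, vz) velocity command in meters per second.
    """
    error = [t - m for m, t in zip(measured, target)]
    command = [gain * e for e in error]
    speed = sum(c * c for c in command) ** 0.5
    if speed > max_speed:
        command = [c * max_speed / speed for c in command]
    return tuple(command)

def simulate(start, trajectory, dt=1 / 60):
    """Follow a pre-generated trajectory, one waypoint per control tick.

    The drone is idealized as a point that moves exactly as commanded;
    in a real setup, the position would come from the motion capture system.
    """
    position = list(start)
    for waypoint in trajectory:
        v = velocity_command(position, waypoint)
        position = [p + vi * dt for p, vi in zip(position, v)]
    return tuple(position)

# A single hover target held for five seconds at 60 ticks per second.
hover = (0.5, 0.0, 1.0)
final = simulate((0.0, 0.0, 0.0), [hover] * 300)
```

Capping the commanded speed keeps a large initial error from producing an abrupt movement, which matters when the drones share a stage with dancers.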

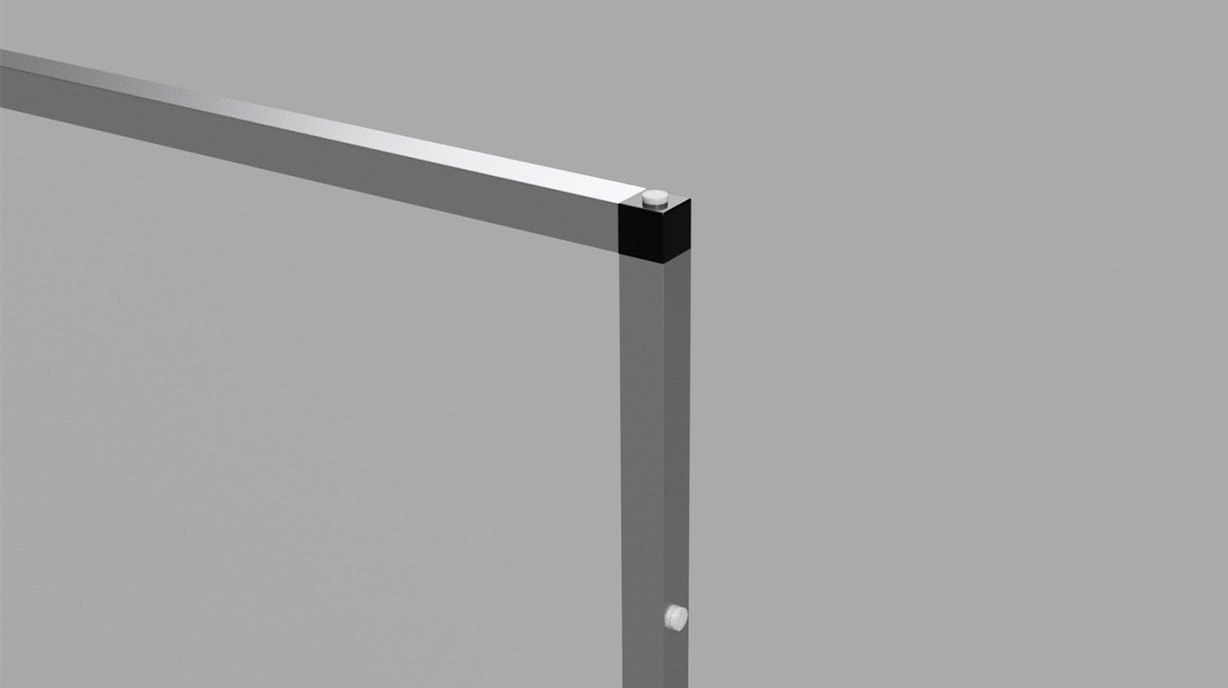

Frame

The frame serves an important role in projection mapping onto the half screen and in AR synthesis. It contains seven infrared LEDs and built-in batteries, and the whole structure is recognized as a rigid body by the motion capture system. Because they are visible to the naked eye, retroreflective markers are usually not well suited to stage props. Instead, we designed and developed a system using infrared LEDs and diffusive reflectors that allows for stable tracking while remaining invisible to the naked eye.
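One property that makes a marker set usable as a rigid body is that the distances between its markers stay fixed however the object moves. The sketch below checks that property for a set of seven markers. The marker layout, tolerance, and function names are hypothetical, and a real tracking system would go on to solve for the frame's full position and orientation, which is not shown.

```python
import itertools
import math

def distance_signature(markers):
    """Sorted list of all pairwise distances between marker positions."""
    return sorted(math.dist(a, b) for a, b in itertools.combinations(markers, 2))

def matches_rigid_body(observed, reference, tolerance=0.005):
    """True if observed markers have the same pairwise distances as the reference.

    Pairwise distances are unchanged by rotation and translation, so they can
    be compared without knowing the frame's pose. tolerance is in meters.
    """
    if len(observed) != len(reference):
        return False
    return all(abs(o - r) <= tolerance
               for o, r in zip(distance_signature(observed),
                               distance_signature(reference)))

# Hypothetical layout of seven LEDs around a rectangular frame (meters).
reference = [(0, 0, 0), (0.5, 0, 0), (1.0, 0, 0), (1.0, 0.7, 0),
             (0.6, 0.7, 0), (0, 0.7, 0), (0, 0.3, 0)]

# The same frame rotated 90 degrees about the z axis and moved across the stage.
moved = [(-y + 1.0, x + 2.0, z + 0.5) for x, y, z in reference]

# A different shape: one marker displaced 30 cm out of the plane.
bent = list(reference)
bent[2] = (1.0, 0, 0.3)

same_frame = matches_rigid_body(moved, reference)
different_shape = matches_rigid_body(bent, reference)
```

An asymmetric layout, as in this example, helps because a symmetric one would look identical from several orientations.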

https://www.youtube.com/watch?time_continue=38&v=mHpLOO

Rhizomatiks Research

https://research.rhizomatiks.com/